Home

Welcome to Ivy's website

Empowering the World with My Data Analysis and Data Engineering Skills

“If you don't produce, you won't thrive - no matter how skilled or talented you are.”

- Cal Newport, Deep Work: Rules for Focused Success in a Distracted World

I am Ivy, and I combine a healthcare background with data skills. I am looking for a Data Engineer / Data Analyst position in the UK, and any help will be highly appreciated! I am adept at SQL, Python, and data visualisation; I am a certified Azure Data Engineer and GCP Data Engineer; I have experience handling large datasets (one published dataset link); and I love building data pipelines with Docker and Airflow.

- 3 years studying in a research lab, where I used A/B testing to validate my hypotheses

- 2 years working at a biotech startup, where I managed databases and prepared datasets

- 1 year studying Business Analytics, where I converted data into business insights

- 2 years of self-training, during which I kept learning data engineering tools

Data Analysis Projects

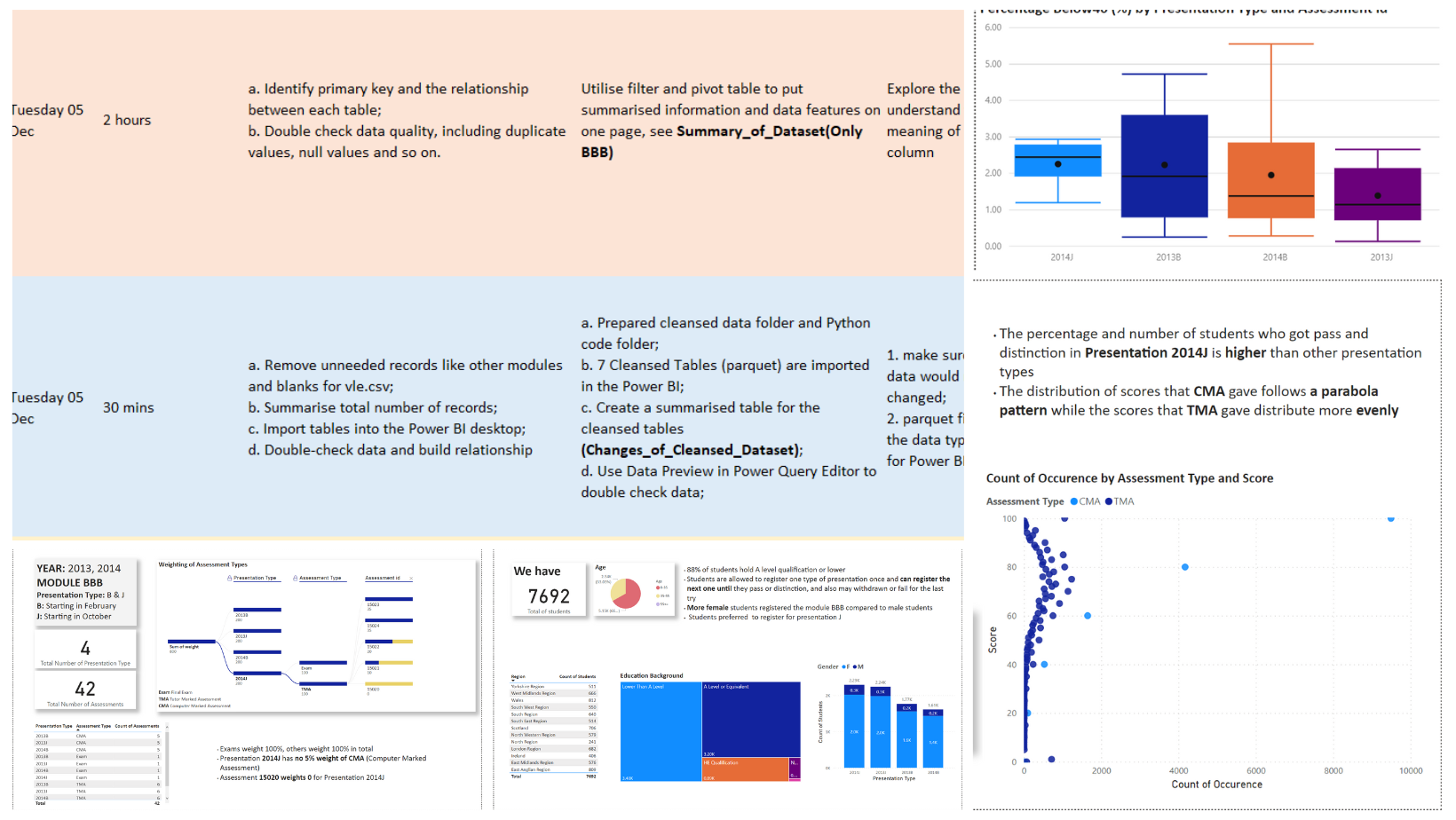

Education-Focused Analysis: How Assessment Types Shape the Final Result

I used Power BI to explore how the mix and weighting of assessment types shaped the final result.

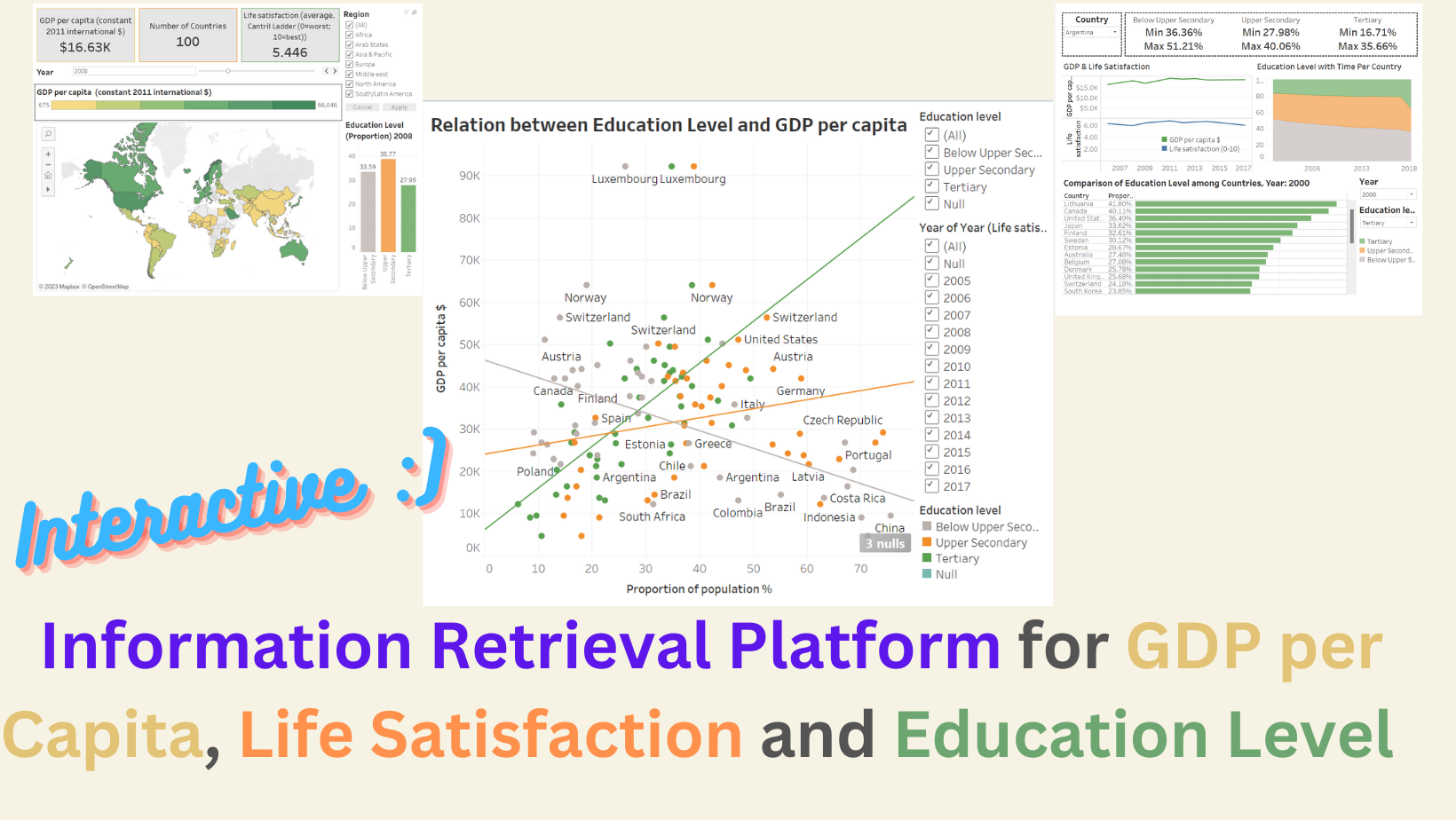

A self-service platform for GDP, Life Satisfaction and Education Level

I used Tableau to build a self-service platform including information about GDP, Life Satisfaction and Education Level


BT Customer Churn Influencer

I used Power BI to visualise the characteristics of churned BT customers, and Python with logistic regression to identify the key churn influencers.

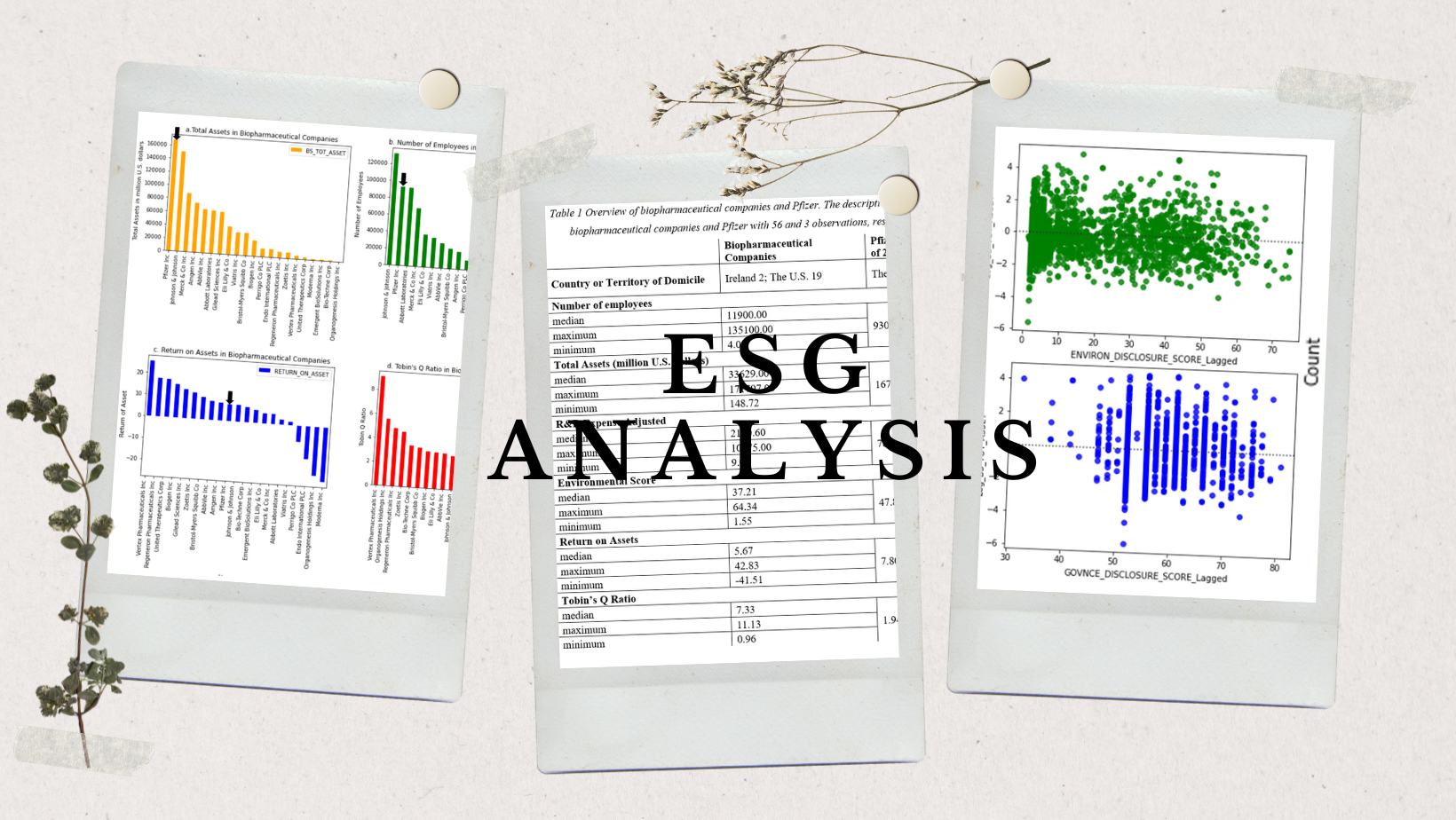

ESG Analysis for Pfizer

I used Python to analyse Pfizer's position in the pharmaceutical industry, and linear regression to quantify the relationship between ESG scores and total assets.

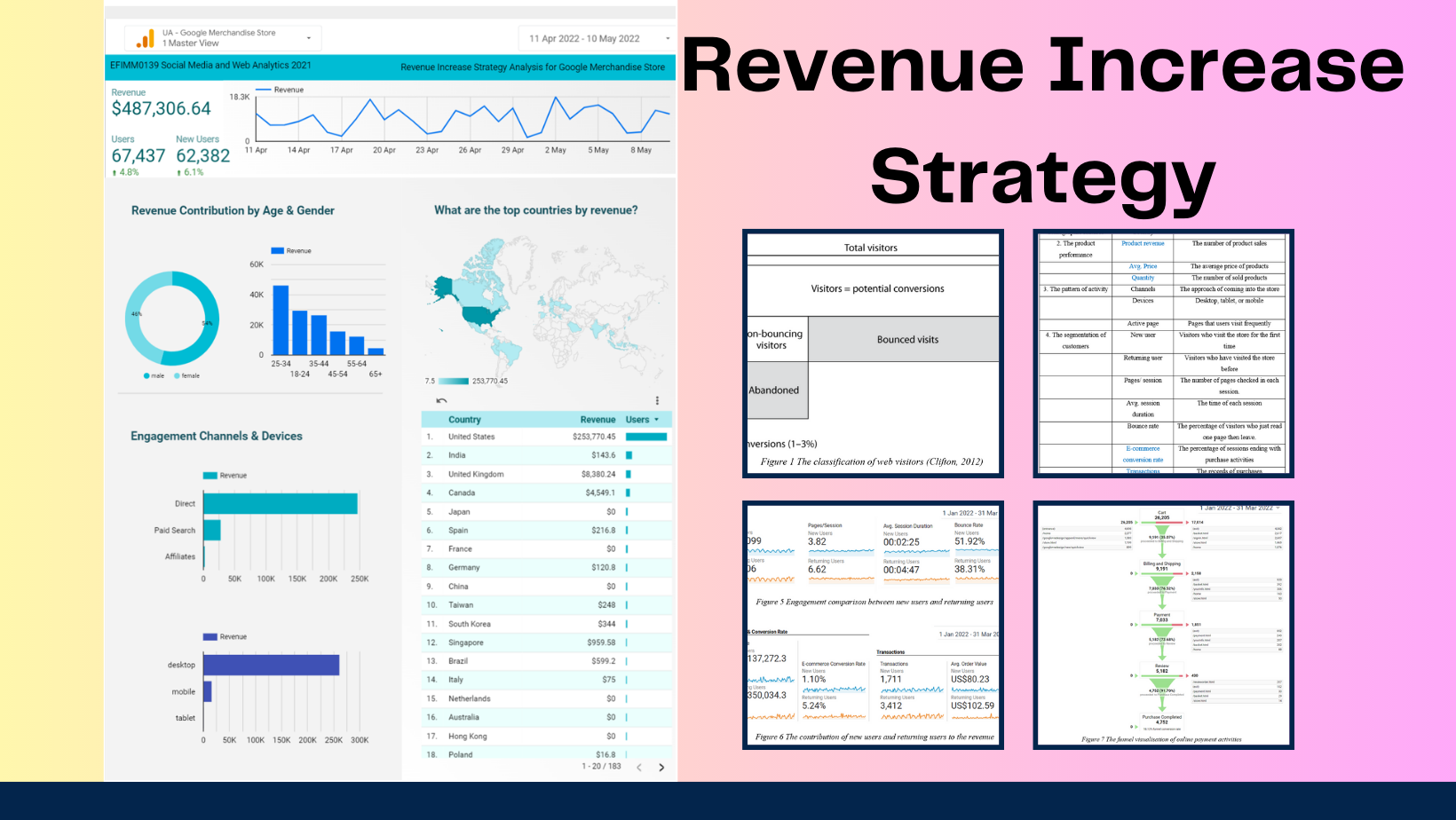

Revenue increase strategy analysis for Google merchandise store

I used Google Analytics and Looker Studio to segment customers and analyse customer behaviour.

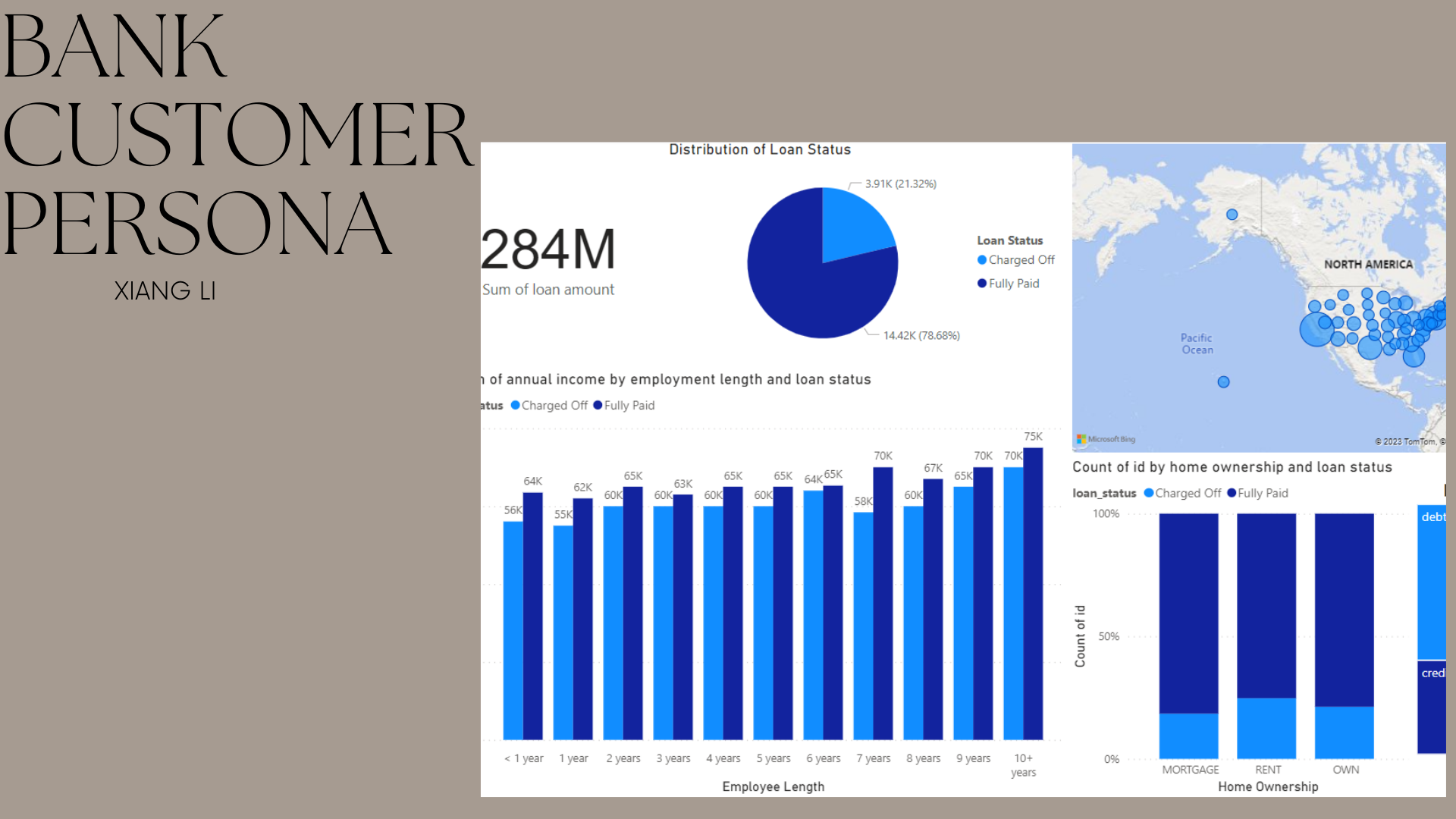

Lloyds Bank Customer Profiling

I used Power BI to profile customers for Lloyds Bank

Database Projects

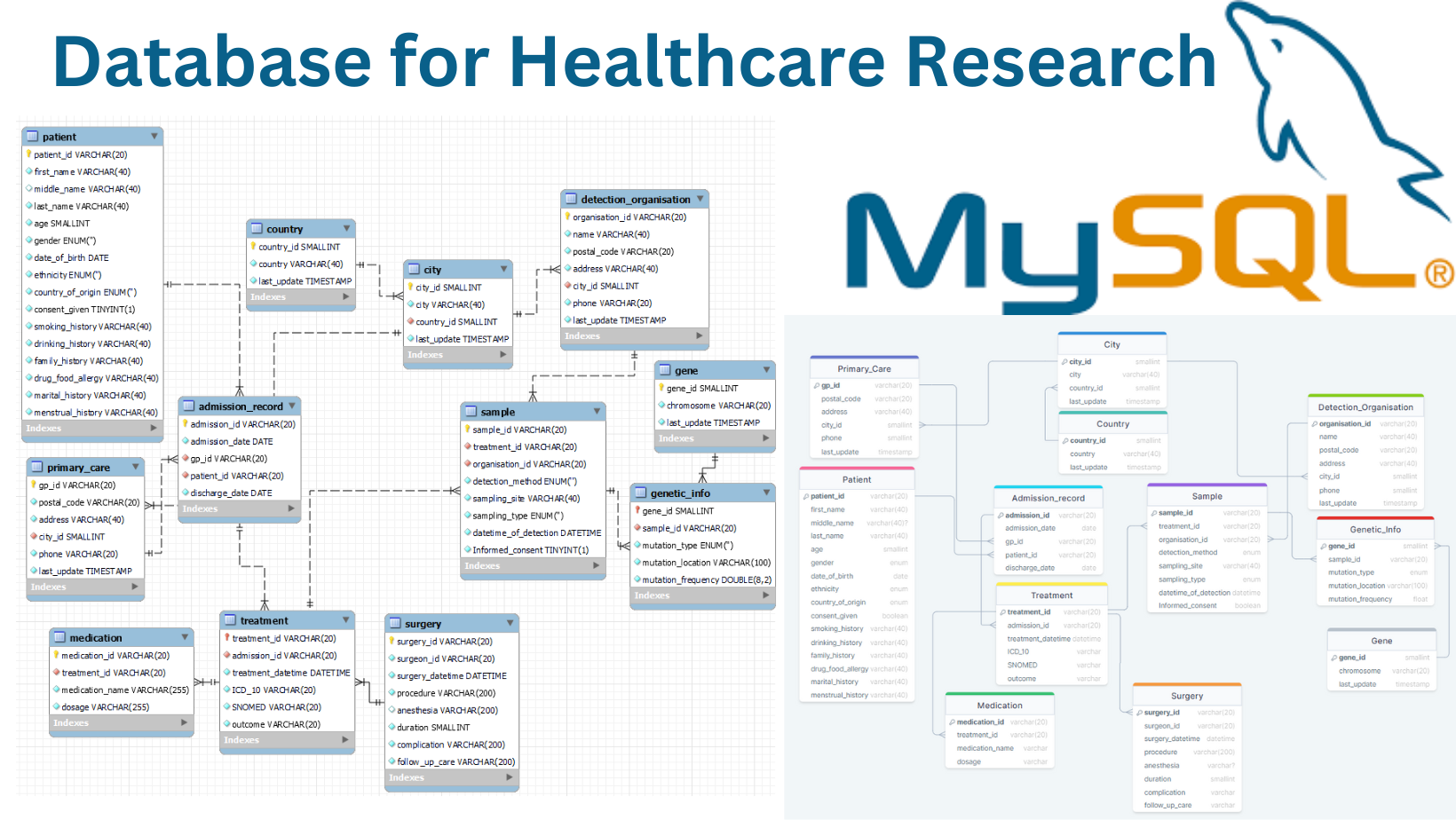

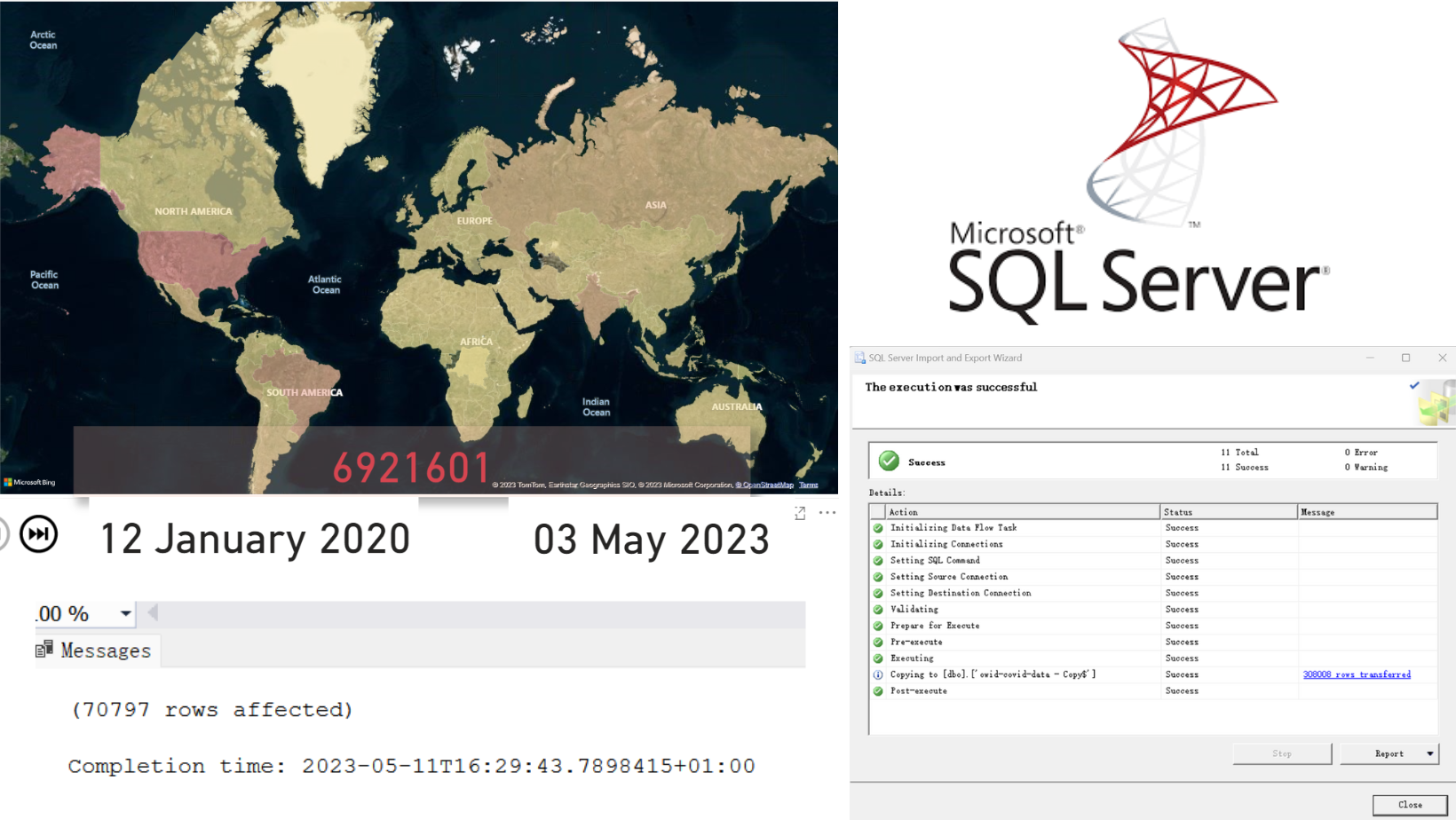

Data Platform Design for Healthcare Research

I used MySQL to create 11 tables that normalise clinical and genetic data. The work is designed for healthcare research.

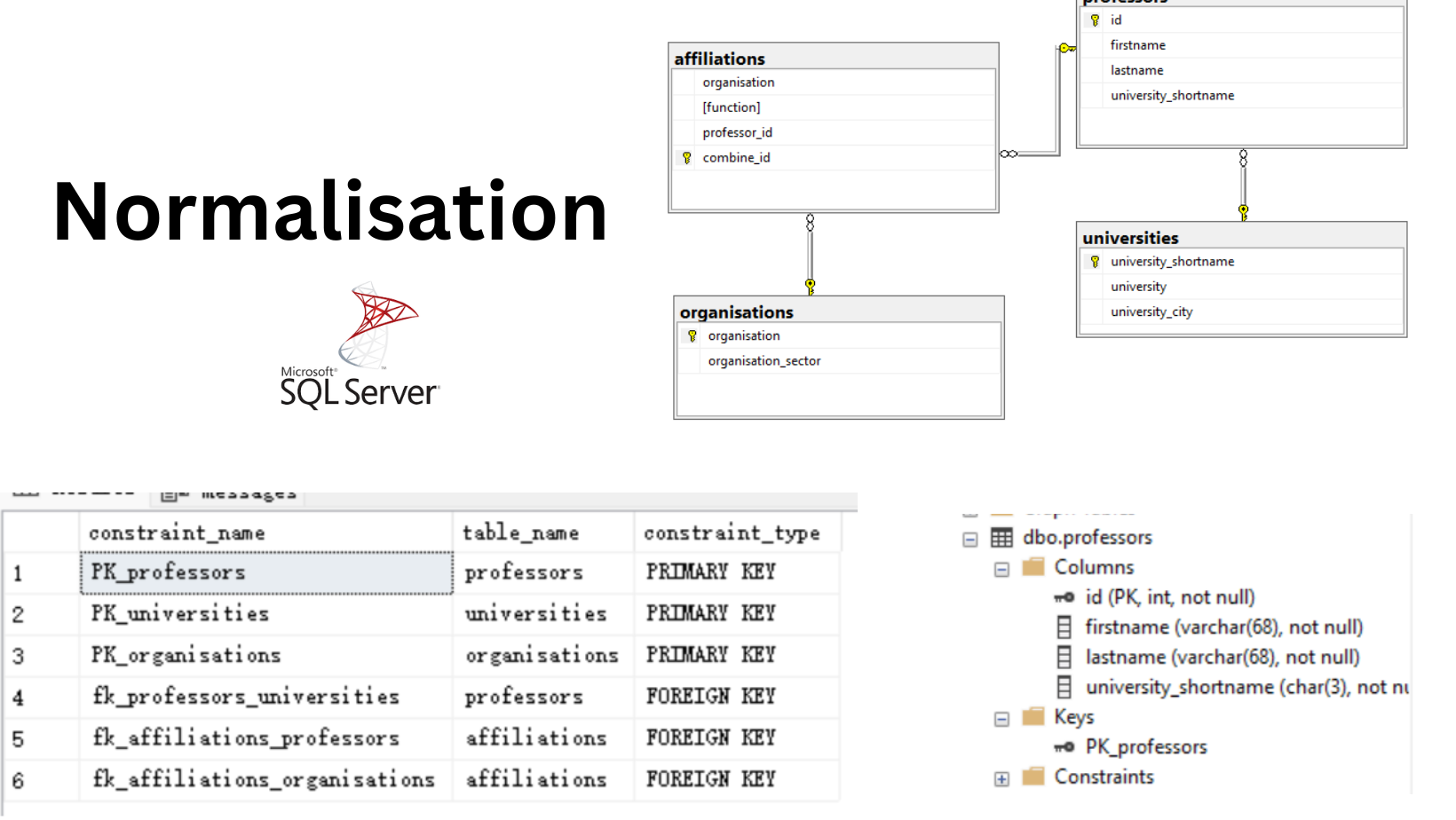

Normalisation for professors in organisations with SQL Server

I used SQL Server to normalise a single, information-heavy table. The project focused on details such as primary keys, surrogate keys, relationships, ON DELETE NO ACTION and so on.

Small Projects


5 Tips to Store an Online Zip File Locally (Python)

In my first blog post, I explained how to build a more structured directory using f-strings, the os module, requests and the zipfile library (ZipFile).
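The core idea of that post can be sketched in a few lines. This is a minimal sketch, not the code from the blog: the function name `extract_zip_bytes` and the `<base>/<dataset>/raw` folder layout are hypothetical choices. It takes the archive as bytes so the download step stays separate; with requests, those bytes would come from `requests.get(url).content`.

```python
import io
import os
import zipfile


def extract_zip_bytes(zip_bytes: bytes, base_dir: str, dataset_name: str) -> list:
    """Unpack a zip archive held in memory into base_dir/dataset_name/raw.

    The folder layout is a hypothetical example of a structured directory.
    Returns the paths of the extracted entries.
    """
    target_dir = os.path.join(base_dir, dataset_name, "raw")
    # Create every missing folder on the path; do nothing if it already exists
    os.makedirs(target_dir, exist_ok=True)
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        names = archive.namelist()
        archive.extractall(target_dir)
    print(f"Extracted {len(names)} file(s) into {target_dir}")
    return [os.path.join(target_dir, name) for name in names]
```

For example, `extract_zip_bytes(requests.get(url).content, "data", "taxi")` would place the files under `data/taxi/raw`, where `url` and `"taxi"` are placeholders.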

3 Steps to Clean Data in SQL Server

I have now switched my on-premises SQL tool to SQL Server. To prepare clean data for data visualisation, there are 3 main steps.

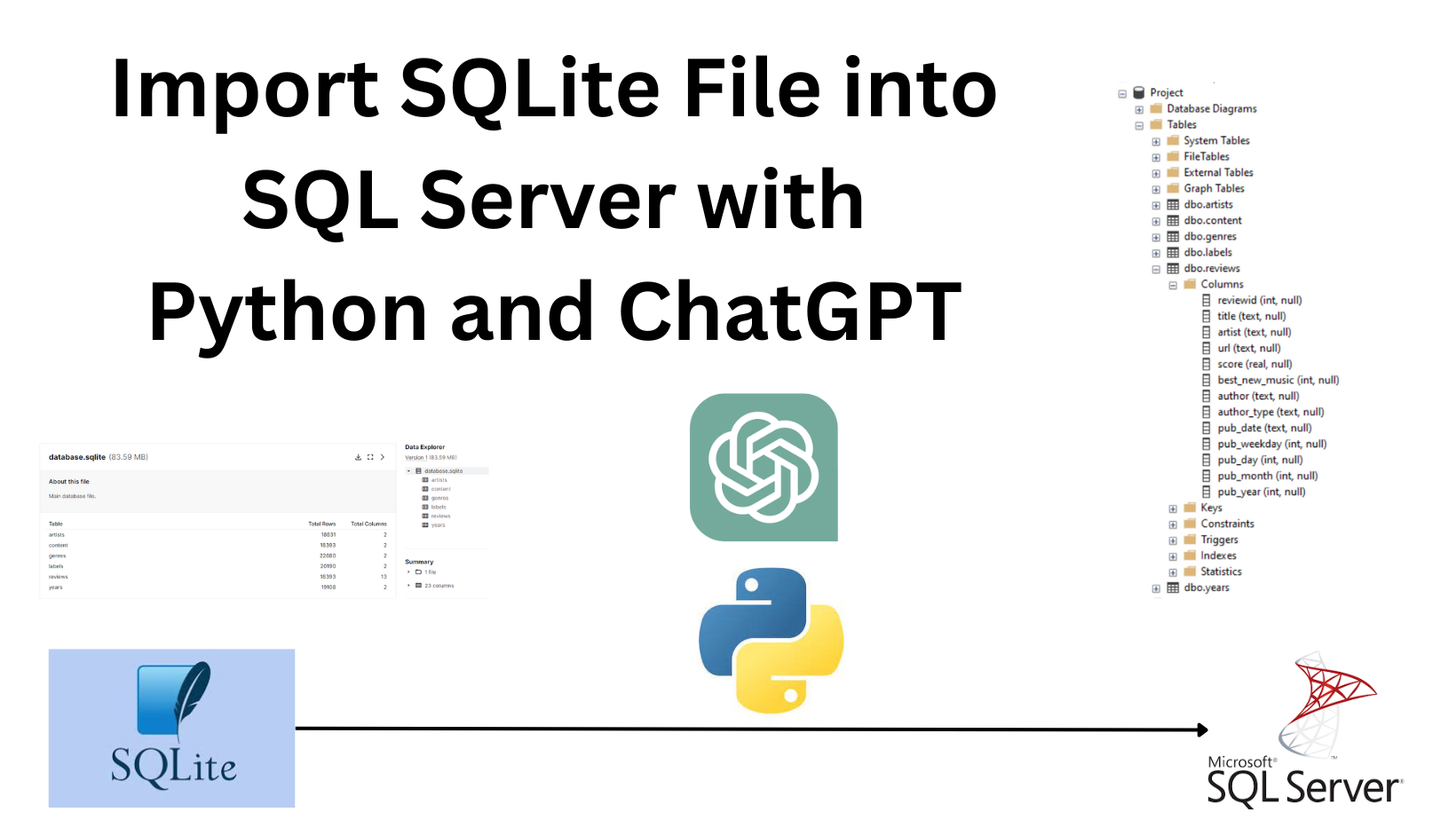

Import SQLite File into SQL Server with Python and ChatGPT

I learned how to use ChatGPT to help connect a SQLite file to SQL Server with Python.
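The heart of such a transfer can be sketched with Python's DB-API. This is a minimal sketch, not the code from the post: `copy_table` and the `patients` table are hypothetical, it assumes the destination table already exists with the same columns, and it assumes the destination driver accepts `?` placeholders (sqlite3 does, and so does pyodbc, a common SQL Server driver). To keep it runnable anywhere, both ends here are SQLite.

```python
import sqlite3


def copy_table(src_conn, dst_conn, table: str, batch_size: int = 1000) -> int:
    """Copy all rows of `table` from one DB-API connection to another.

    Assumes the destination table already exists with the same columns and
    that its driver uses '?' placeholders. `table` is formatted straight into
    the SQL, so it must be a trusted name, never user input.
    """
    src_cur = src_conn.cursor()
    src_cur.execute(f"SELECT * FROM {table}")
    columns = [col[0] for col in src_cur.description]  # column names from the source
    placeholders = ", ".join("?" for _ in columns)
    insert_sql = f"INSERT INTO {table} ({', '.join(columns)}) VALUES ({placeholders})"

    dst_cur = dst_conn.cursor()
    copied = 0
    while True:
        rows = src_cur.fetchmany(batch_size)  # read in batches to limit memory use
        if not rows:
            break
        dst_cur.executemany(insert_sql, rows)
        copied += len(rows)
    dst_conn.commit()  # make the inserted rows permanent
    return copied


# Two in-memory SQLite databases stand in for the source file and SQL Server
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE patients (id INTEGER, name TEXT)")
source.executemany("INSERT INTO patients VALUES (?, ?)", [(1, "A"), (2, "B"), (3, "C")])
target = sqlite3.connect(":memory:")
target.execute("CREATE TABLE patients (id INTEGER, name TEXT)")
print(copy_table(source, target, "patients", batch_size=2))  # 3
```

Reading with `fetchmany` keeps memory use flat for large tables, at the cost of one `executemany` round trip per batch.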

Mini Blogs for Data Analysis Tools

Around 200 to 300 words for an overview

SQL

Introduction

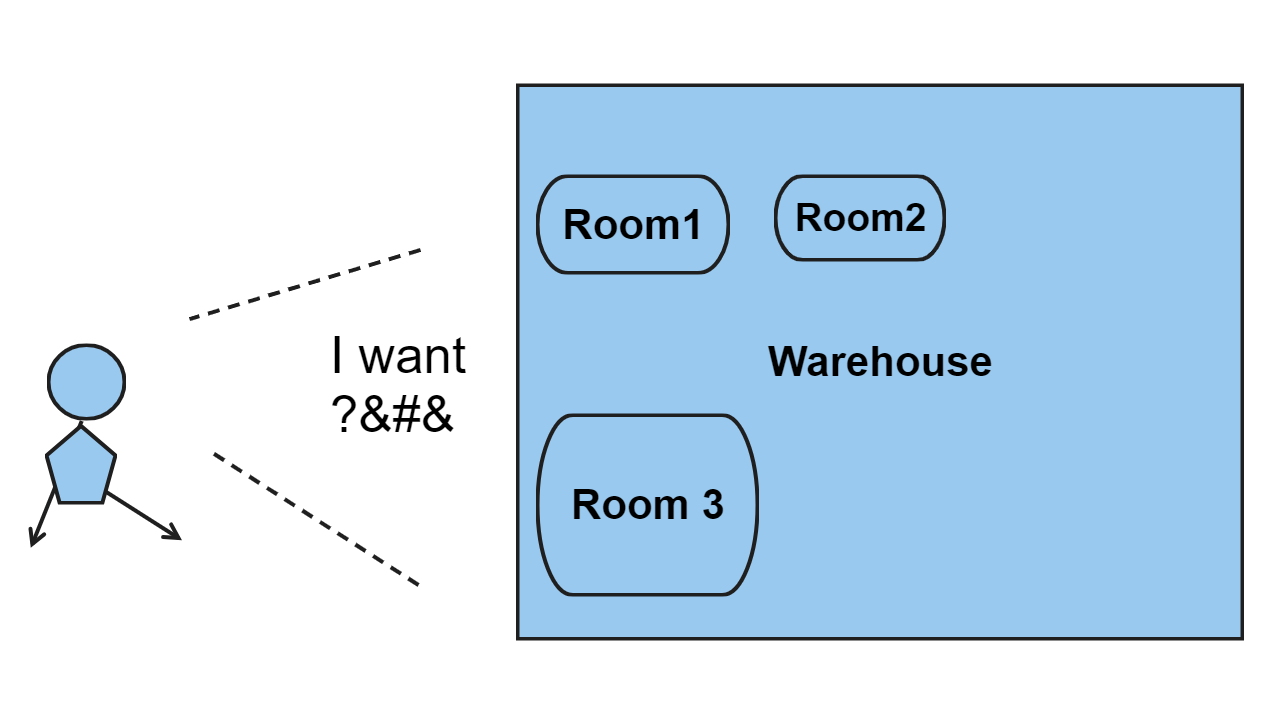

😉 Using an analogy: if data were goods, SQL would be both the design of the warehouse and the first step of the supply chain that moves products from the organised warehouse to the shops where they are displayed.

💁♀️ So, how does SQL work? It gets the warehouse organised (Data Definition, Data Manipulation, Transaction Control, and Data Control) and then picks the exact products needed from millions in storage (Data Query).

🤓 Let me look at the 5 categories in more detail.

Data Definition: design a storage unit with detailed requirements. Each database has tables, and each table has columns that each hold one data type, sometimes with a maximum size (e.g., VARCHAR(size), INTEGER). CREATE, INDEX, ALTER, DROP, TRUNCATE, RENAME

Data Manipulation: populate the tables with data. Load the data delivered from production, add more over time, and update or remove unqualified data. The process is dynamic. INSERT, UPDATE, DELETE

Transaction Control: several statements can be grouped into one transaction, so they are saved together, or withdrawn together if an error occurs. COMMIT, ROLLBACK, SAVEPOINT

Data Control: permission control for safety, so only specified users can access the database. GRANT, REVOKE

Data Query: choose data with filters, adjustments, and simple mathematics. a. Select information for a specific date, person, or region: SELECT, DISTINCT, WHERE, IN, LIKE; b. Combine tables: JOIN merges related tables by columns, while UNION, INTERSECT, EXCEPT combine rows; c. Aggregate data in groups: GROUP BY, SUM, MAX; d. Transform raw columns into a new format: CONCAT, DATEADD, LENGTH, SUBSTRING; e. Handle conditions and missing values: CASE WHEN, IS NULL and so on.

😚 In short, database administrators are responsible for database design and management, while data analysts find the right data using the last category, the query language. Now that I know what SQL does and how it works, I can practise getting data with these functions.
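To see four of the five categories side by side, here is a minimal sketch using Python's built-in sqlite3 module and an in-memory database. The `products` table and its rows are made up for illustration. SQLite has no user accounts, so Data Control (GRANT, REVOKE) cannot be shown here.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # a throwaway database that lives in memory
cur = conn.cursor()

# Data Definition: design the storage unit (table, columns, data types)
cur.execute("CREATE TABLE products (id INTEGER PRIMARY KEY, name VARCHAR(50), stock INTEGER)")

# Data Manipulation: load data, then update it
cur.executemany(
    "INSERT INTO products (name, stock) VALUES (?, ?)",
    [("apple", 10), ("banana", 0), ("cherry", 25)],
)
cur.execute("UPDATE products SET stock = 5 WHERE name = 'banana'")

# Transaction Control: save the work so far...
conn.commit()
# ...then withdraw a mistaken change before it is committed
cur.execute("DELETE FROM products")
conn.rollback()

# Data Query: pick exactly the rows needed
rows = cur.execute(
    "SELECT name, stock FROM products WHERE stock > 6 ORDER BY name"
).fetchall()
print(rows)  # [('apple', 10), ('cherry', 25)]
```

The rollback brings all three rows back, because Python's sqlite3 opens a transaction implicitly before the DELETE and nothing was committed after it.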

Query Language

Functional Language

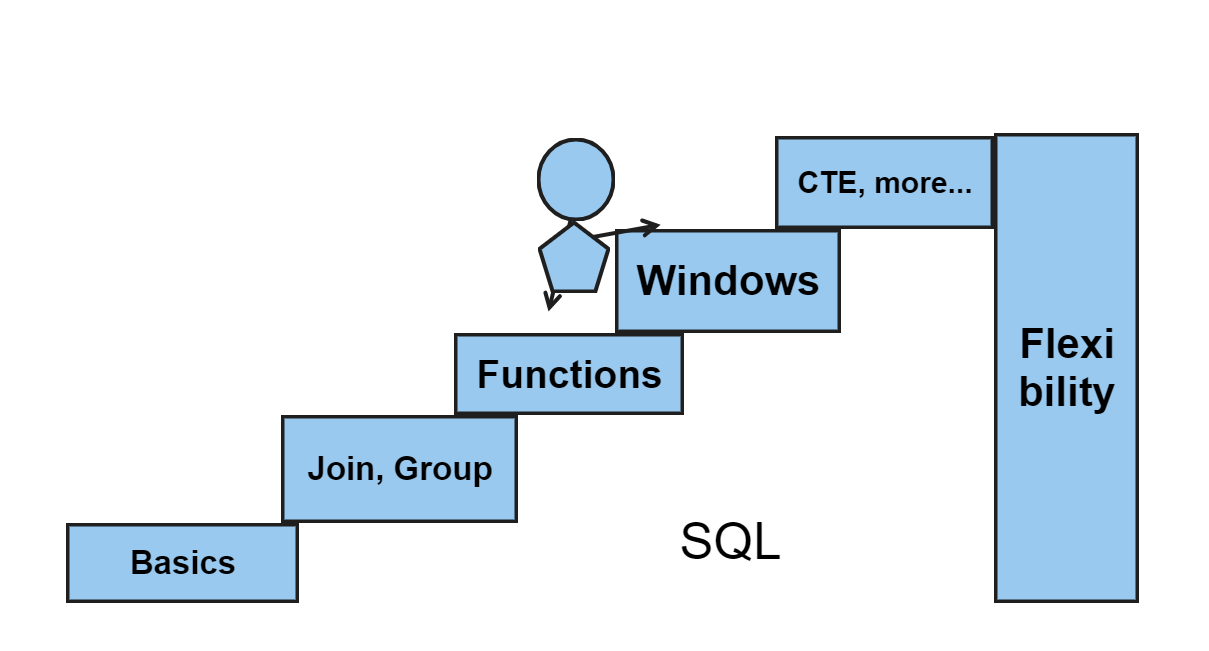

😁 I want to continue to have a more detailed framework for the query language branch.

Imagine I have several tables to extract data from. What data do I want?

💪 The most frequent steps are:

Step 1. Choose tables with FROM

Step 2. Add conditions with WHERE (=, <>, BETWEEN, IN, LIKE)

Step 3. Choose columns with SELECT and simple aggregation {COUNT, MAX, MIN, AVG, SUM, DISTINCT}

Step 4. Sort the rows with ORDER BY and keep only the first n rows with LIMIT
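The four steps can be tried out in SQLite from Python. A minimal sketch with a made-up `sales` table: the clauses are written starting with SELECT, but they are logically evaluated roughly in the order of the steps above (FROM, WHERE, SELECT, ORDER BY, LIMIT).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, sale_date TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        ("North", "2024-01-05", 120),
        ("South", "2024-01-06", 80),
        ("North", "2024-02-10", 200),
        ("East", "2024-02-11", 50),
        ("South", "2024-03-01", 150),
    ],
)

top_two = conn.execute("""
    SELECT region, amount                                  -- Step 3: choose columns
    FROM sales                                             -- Step 1: choose the table
    WHERE sale_date BETWEEN '2024-01-01' AND '2024-02-29'  -- Step 2: filter rows
    ORDER BY amount DESC                                   -- Step 4: sort...
    LIMIT 2                                                -- ...and keep the first 2 rows
""").fetchall()
print(top_two)  # [('North', 200), ('North', 120)]

# Step 3 with simple aggregation instead of raw columns
summary = conn.execute(
    "SELECT COUNT(*), MAX(amount), COUNT(DISTINCT region) FROM sales WHERE amount > 60"
).fetchone()
print(summary)  # (4, 200, 2)
```

Dates are stored as ISO text (YYYY-MM-DD), so BETWEEN compares them correctly as strings.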

👉 The possible scenarios are:

Option 1. Merge columns into one table with JOIN {LEFT, SELF, FULL, CROSS} and ON (=), USING; Merge rows into one table with UNION {INTERSECT, EXCEPT}

Option 2. Classify all rows into groups for calculation and comparison {GROUP BY, HAVING}

Option 3. Add aggregated or transformed values to each row with window functions, without collapsing the rows; if PARTITION BY is omitted, the whole result set is one window

Aggregation functions MIN / MAX/ AVG/ SUM (…) OVER(PARTITION BY…)

Ranking in groups RANK()/ DENSE_RANK() OVER(PARTITION BY… ORDER BY…)

Move the value up or down LEAD/LAG (…, offset) OVER(ORDER BY…)

Create a WINDOW related to the current row OVER(ORDER BY… ROWS/RANGE/GROUPS BETWEEN {n /UNBOUNDED PRECEDING, CURRENT ROW} AND { n /UNBOUNDED FOLLOWING, CURRENT ROW})
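Options 2 and 3 differ in whether rows collapse. A minimal sketch, again with sqlite3 and a made-up `scores` table (window functions need SQLite 3.25 or newer, which recent Python builds include): GROUP BY returns one row per team, while the window functions keep every player's row and add new values to it.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE scores (team TEXT, player TEXT, points INTEGER)")
conn.executemany(
    "INSERT INTO scores VALUES (?, ?, ?)",
    [("A", "Ann", 30), ("A", "Ben", 20), ("A", "Cat", 30), ("B", "Dan", 10), ("B", "Eve", 40)],
)

# Option 2: GROUP BY collapses each team into one row; HAVING filters the groups
per_team = conn.execute(
    "SELECT team, SUM(points) FROM scores GROUP BY team HAVING SUM(points) > 60"
).fetchall()
print(per_team)  # [('A', 80)]

# Option 3: window functions keep every row and add new values to it
windowed = conn.execute("""
    SELECT player,
           SUM(points) OVER (PARTITION BY team) AS team_total,
           DENSE_RANK() OVER (PARTITION BY team ORDER BY points DESC) AS rank_in_team,
           LAG(points, 1) OVER (ORDER BY player) AS previous_points,
           SUM(points) OVER (ORDER BY player
                             ROWS BETWEEN UNBOUNDED PRECEDING AND CURRENT ROW) AS running_total
    FROM scores
    ORDER BY player
""").fetchall()
for row in windowed:
    print(row)
```

Ann and Cat tie on 30 points, so DENSE_RANK gives both rank 1 and Ben rank 2; RANK would also give Ben 3 in a larger tie pattern, which is the usual reason to pick one over the other.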

💁♀️ The special techniques are:

Technique 1: Nest one query inside () as a subquery, so the outer query can use the inner query's output

Technique 2: Create a Common Table Expression (CTE), a named temporary result set that simplifies the query: WITH table_name AS (……)

Technique 3: Define a named window once, then reference it with OVER in the SELECT clause {WINDOW window_name AS (PARTITION BY…)}

✌ With this, I can solve problems by combining them together. The more questions I solve, the more functions I will know, and the framework will be enriched.
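The three special techniques can be compared on one small made-up `orders` table. A minimal sketch in SQLite through Python; the named WINDOW clause needs SQLite 3.28 or newer (SQL Server only added it in SQL Server 2022).

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("Ann", 50), ("Ann", 70), ("Ben", 20), ("Cat", 90), ("Cat", 10)],
)

# Technique 1: subquery; the outer query uses the inner query's output (the average, 48)
above_average = conn.execute("""
    SELECT customer, amount FROM orders
    WHERE amount > (SELECT AVG(amount) FROM orders)
    ORDER BY amount
""").fetchall()
print(above_average)  # [('Ann', 50), ('Ann', 70), ('Cat', 90)]

# Technique 2: a CTE names an intermediate result so the main query stays simple
big_customers = conn.execute("""
    WITH customer_totals AS (
        SELECT customer, SUM(amount) AS total FROM orders GROUP BY customer
    )
    SELECT customer, total FROM customer_totals WHERE total >= 100 ORDER BY customer
""").fetchall()
print(big_customers)  # [('Ann', 120), ('Cat', 100)]

# Technique 3: define the window once, then reuse it with OVER
with_totals = conn.execute("""
    SELECT customer, amount, SUM(amount) OVER w AS customer_total
    FROM orders
    WINDOW w AS (PARTITION BY customer)
    ORDER BY customer, amount
""").fetchall()
print(with_totals)
```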

Power BI Desktop

Introduction

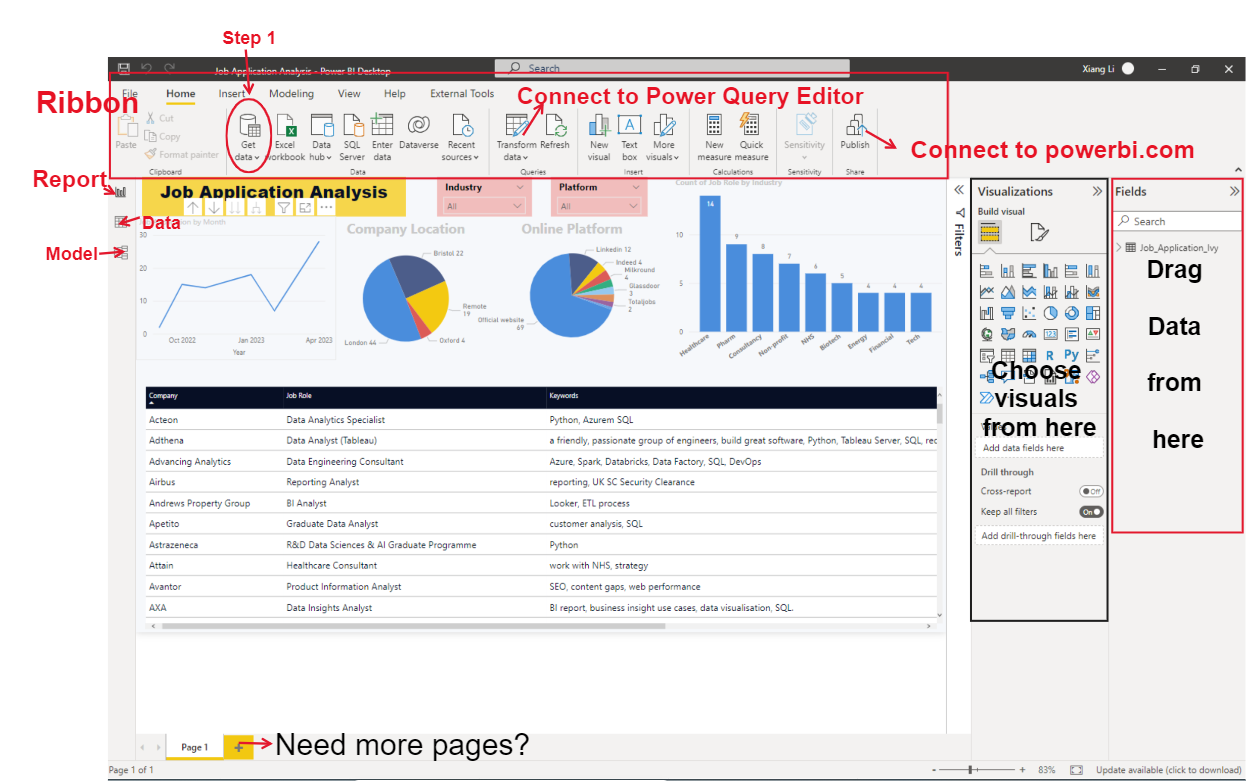

😜 Wishing the sunshine would come back. Today, I want to explain the fundamentals of Power BI Desktop concisely.

🤔 Why do we need Power BI? Because we need to tell stories to end users. Power BI can hold datasets and visualise them, showing a large amount of information at once across several pages.

🤗 So how does it work? Power BI Desktop has two main parts. The main interface (with three views: report, data and model) is for data modelling and visualisation, while Power Query Editor handles data transformation.

💁♀️ Then the common steps are:

Step 1: Get Data from connectors, they can be Files (e.g., Excel, CSV), databases (e.g., SQL Server), and URLs (e.g., SharePoint).

Step 2 (Optional): Sometimes we do not have permission to change the raw data, or our requirements are personalised, so we need to adjust the data before loading it: view column quality and distribution, pivot or unpivot, split columns and so on.

Step 3: In the model view, build relationships among tables so they can be joined, and optionally add new measures or columns (this does not change the source data).

Step 4: Choose a visual in the pane on the right, then drag fields into Values and, optionally, Legend.

🙆♀️ That’s it, I hope it is helpful for my friends who want to learn a data visualisation tool.

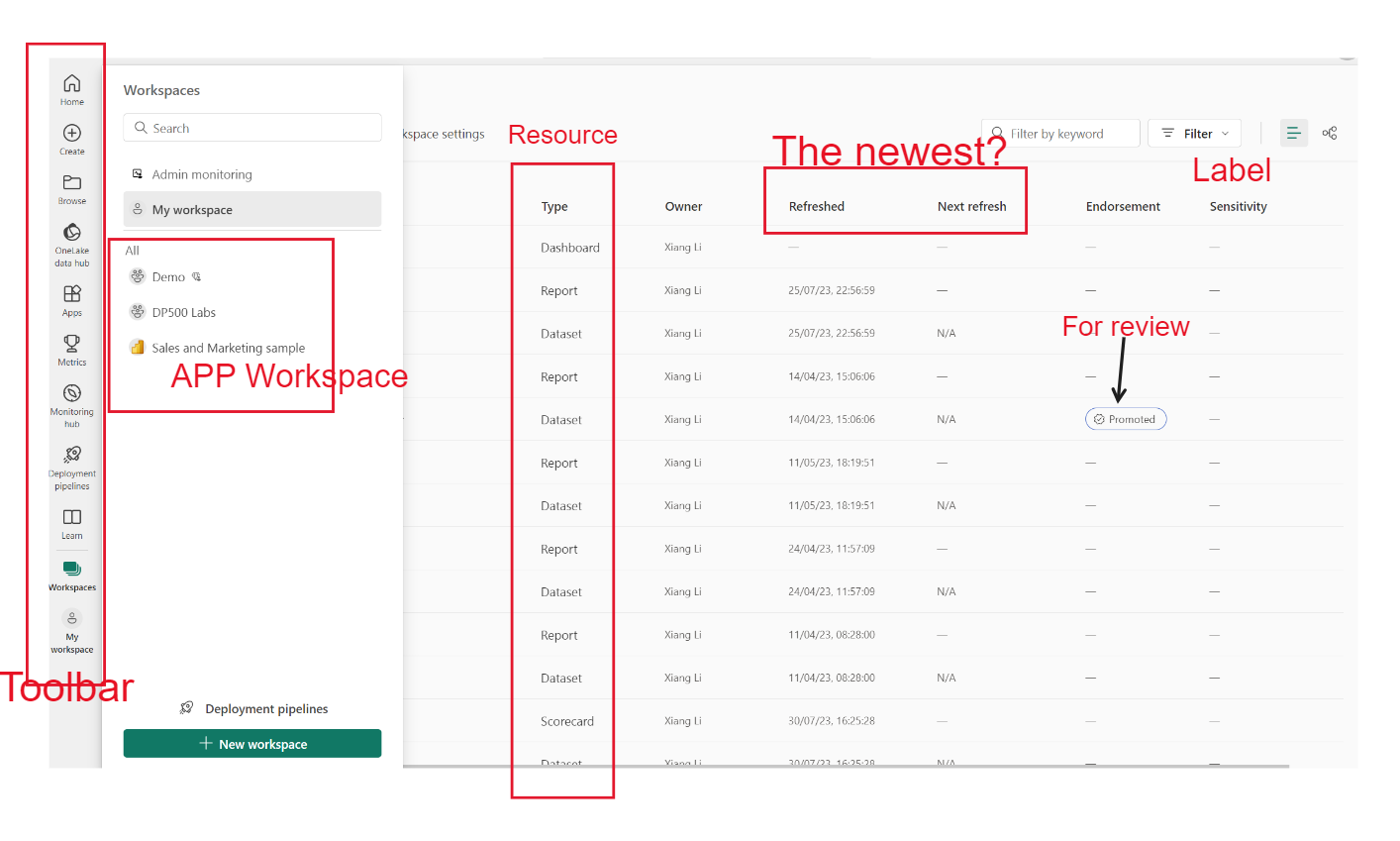

Power BI Service

Introduction

😘 At the beginning of another rainy week, I want to continue by introducing the Power BI service.

🤔 𝐖𝐡𝐲 𝐝𝐨 𝐰𝐞 𝐧𝐞𝐞𝐝 𝐭𝐡𝐞 𝐏𝐨𝐰𝐞𝐫 𝐁𝐈 𝐬𝐞𝐫𝐯𝐢𝐜𝐞? Publishing reports from Desktop to the Service moves them from a local machine to a shared online space, so others can also use our work.

💁♀️ 𝐒𝐨 𝐡𝐨𝐰 𝐝𝐨𝐞𝐬 𝐢𝐭 𝐰𝐨𝐫𝐤?

a. It is a web-based resource management platform.

b. It holds My workspace (private to me), and I can also create APP workspaces to group and share resources under our account. Each workspace can contain datasets, reports, dashboards, dataflows, datamarts (premium tier: support Power Query Editor + SQL/Visual query + report building, providing a Desktop-like experience), and scorecards.

c. Premium tier also has the deployment pipeline function which can help development by staging workspaces.

d. These resources can be shared extensively via creating APPs.

👉 𝐓𝐡𝐞 𝐟𝐨𝐥𝐥𝐨𝐰𝐢𝐧𝐠 𝐪𝐮𝐞𝐬𝐭𝐢𝐨𝐧𝐬 𝐚𝐫𝐞: 𝐇𝐨𝐰 𝐭𝐨 𝐦𝐚𝐢𝐧𝐭𝐚𝐢𝐧 𝐚𝐧𝐝 𝐬𝐞𝐜𝐮𝐫𝐞 𝐝𝐚𝐭𝐚 𝐢𝐧 𝐭𝐡𝐞 𝐒𝐞𝐫𝐯𝐢𝐜𝐞 𝐰𝐡𝐞𝐧 𝐭𝐡𝐞 𝐝𝐚𝐭𝐚 𝐛𝐞𝐜𝐨𝐦𝐞𝐬 𝐡𝐚𝐥𝐟-𝐩𝐮𝐛𝐥𝐢𝐜?

Firstly, schedule refresh for the updated information and apply sensitivity labels for datasets if needed.

Secondly, for internal workers during the development process, grant each one the least-privileged of the 4 workspace roles that still lets them do their job: Viewer < Contributor < Member < Admin

Thirdly, for consumers or external users, grant them viewer roles for the published APPs.

Additionally, in one report, row-level security can make sure viewers only read the information they need.

😁 I hope this post gives you a general overview of the Power BI service and makes it easier to explore. Have a nice week ahead.

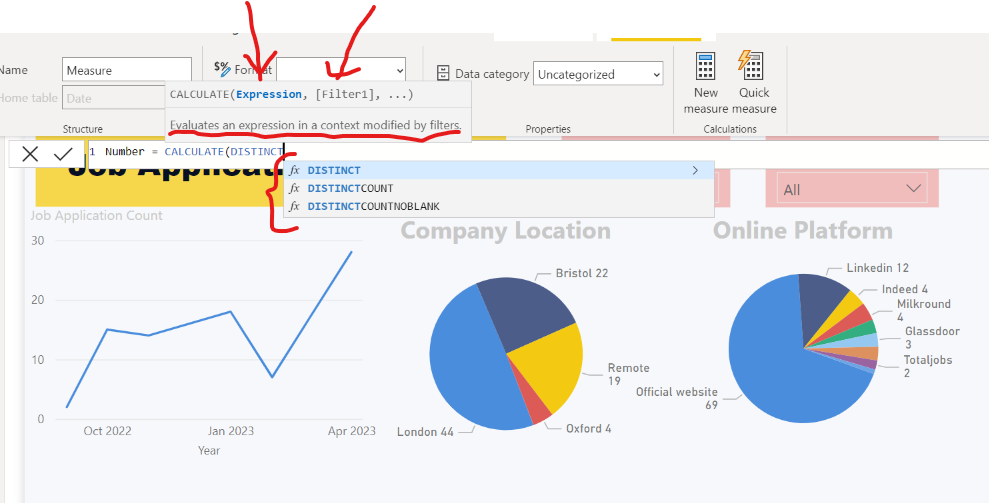

Power BI DAX

Functional Language

🤗 At the beginning of August, I want to introduce the core power of Power BI: DAX (Data Analysis Expressions)

🤔 𝐅𝐢𝐱𝐞𝐝 𝐐𝐮𝐞𝐬𝐭𝐢𝐨𝐧: 𝐖𝐡𝐲 𝐝𝐨 𝐰𝐞 𝐧𝐞𝐞𝐝 𝐃𝐀𝐗? As its name suggests, DAX is mainly for data analysis; in other words, it provides more flexibility for handling analysis

💁♀️ 𝐒𝐨 𝐡𝐨𝐰 𝐝𝐨𝐞𝐬 𝐢𝐭 𝐰𝐨𝐫𝐤?

It uses functions to calculate new data from existing data.

It has three output forms: measure, calculated column, and calculated table.

𝐌𝐞𝐚𝐬𝐮𝐫𝐞 is like a stored calculation recipe; it is recalculated every time it is used in a report, according to the current context (e.g., filters and slicers).

𝐂𝐚𝐥𝐜𝐮𝐥𝐚𝐭𝐞𝐝 𝐂𝐨𝐥𝐮𝐦𝐧 is computed for each row of a table and stored in the model; it does not change the source data

𝐂𝐚𝐥𝐜𝐮𝐥𝐚𝐭𝐞𝐝 𝐓𝐚𝐛𝐥𝐞 is often used to create a date table for hierarchy (the results can be analysed by year, quarter, month, and day)

👉 𝐖𝐡𝐚𝐭 𝐚𝐬𝐩𝐞𝐜𝐭𝐬 𝐝𝐨𝐞𝐬 𝐢𝐭 𝐜𝐨𝐧𝐭𝐫𝐢𝐛𝐮𝐭𝐞?

The most common and complex one is Analysis using aggregation, logical, date, and filter functions

Activate an inactive relationship with 𝐔𝐒𝐄𝐑𝐄𝐋𝐀𝐓𝐈𝐎𝐍𝐒𝐇𝐈𝐏( ) when one table has multiple foreign keys to the same table (e.g., order date and ship date)

Simplify row-level security by combining the user identity table and 𝐔𝐒𝐄𝐑𝐏𝐑𝐈𝐍𝐂𝐈𝐏𝐀𝐋𝐍𝐀𝐌𝐄( )

🤓 𝐈 𝐰𝐢𝐥𝐥 𝐧𝐨𝐭 𝐥𝐢𝐬𝐭 𝐞𝐯𝐞𝐫𝐲 𝐟𝐮𝐧𝐜𝐭𝐢𝐨𝐧 𝐛𝐮𝐭 𝐬𝐡𝐚𝐫𝐞 𝐡𝐨𝐰 𝐭𝐨 𝐥𝐞𝐚𝐫𝐧 𝐢𝐭 𝐞𝐟𝐟𝐢𝐜𝐢𝐞𝐧𝐭𝐥𝐲.

Tip 1: Have a look at the official documentation (link: https://lnkd.in/e5nFmMH8) or the Learn path (link: https://lnkd.in/ewSGXZyj). The documentation groups DAX functions into categories and provides examples, which gives a general idea of what functions are available.

Tip 2: Each function is explained in the 𝐓𝐨𝐨𝐥𝐭𝐢𝐩 that appears after typing ‘(’ after the function name; read what it does and what parameters it needs.

Tip 3: Use variables (VAR … RETURN) when a measure becomes complex.

😊 Happy Power BI.

Apache Parquet

Efficient File Type

Features:

Columnar storage

Compressed with Dictionary or Run-Length Encoding (RLE) when the column has duplicate values

Dictionary encoding suits repetitive categorical data, e.g., music genres

RLE suits data with long sequences of the same value, e.g., consecutive 'Open' or 'Closed' for stores

Small size

Metadata stores schema

Strong Data Integrity

Support nested data structure
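The two encodings can be illustrated in plain Python. This is a conceptual sketch only: the function names are my own, and real Parquet files store the results in a compact binary form (for example, dictionary ids are further bit-packed or run-length encoded), which this code does not reproduce.

```python
from itertools import groupby


def run_length_encode(values):
    """Collapse consecutive repeats into (value, run_length) pairs."""
    return [(value, len(list(run))) for value, run in groupby(values)]


def dictionary_encode(values):
    """Store each distinct value once and replace every value with its small integer id."""
    dictionary = {}
    ids = []
    for value in values:
        if value not in dictionary:
            dictionary[value] = len(dictionary)  # next unused id
        ids.append(dictionary[value])
    return list(dictionary), ids  # dicts keep insertion order, so ids match positions


store_status = ["Open"] * 4 + ["Closed"] * 3 + ["Open"] * 2
genres = ["Rock", "Jazz", "Rock", "Pop", "Jazz", "Rock"]

print(run_length_encode(store_status))  # [('Open', 4), ('Closed', 3), ('Open', 2)]
print(dictionary_encode(genres))        # (['Rock', 'Jazz', 'Pop'], [0, 1, 0, 2, 1, 0])
```

RLE only pays off when equal values sit next to each other, while dictionary encoding helps whenever there are few distinct values, in any order.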

Storage:

Row Groups: the file is split horizontally into row groups, each holding a batch of rows for all columns

Column Chunks: each column within a row group is stored in a "chunk"

Encoding and Offsets: each column chunk is encoded (e.g., Dictionary or RLE), and its position in the file is recorded as an offset

Metadata: describes the structure of the data, including the schema and the encoding used for each column

Reconstruction During Reading: the reader uses the metadata to find and decode only the column chunks it needs, reversing the process above

A Simple Metadata Example:

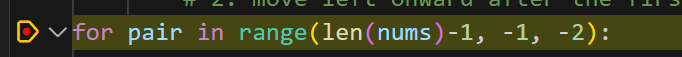

Python

List Key Tools of Python

Data Structures - Set

my_set = {1, 2, 3}

Unordered, no index

Unique Elements

Heterogeneous, can contain integers, strings, tuples and so on at the same time

Elements must be immutable (hashable), but the set itself is mutable: elements can be added or removed

Implemented as a hash table (an array of 'buckets'), so membership testing takes O(1) time on average

Use cases:

membership testing

remove duplicates

set operations: union |, intersection &, difference -, symmetric difference ^, issubset( ), isdisjoint( )

my_set = {1, "hello", (2, 3)} #integer, string and tuple

another_set = set([4, 5, 6]) #set function converts a list into a set

# add elements

my_set.add(4)

# remove elements

my_set.remove(4)

# membership testing, O(1)

2 in my_set

# set operations

union_set = my_set | another_set

Data Structures - Tuple

my_tuple = (1, "hello", 3.14)

0-based Indexing, access elements by their position with `[ ]`

Heterogeneous Data contain different data types, including other tuples, lists, and dictionaries

Immutable Tuple: once a tuple is created, its elements cannot be added, replaced, or removed, but an element that is itself a mutable object (e.g., a list) can still be modified in place

Contiguous Memory

Use cases

Return values in function

Immutable Data Collection

Represent a row with columns as elements for databases

Dictionary keys

my_tuple = ([1, 2], "hello")

my_tuple[0].append(3)

print(my_tuple) # Output: ([1, 2, 3], 'hello')

# tuples without parentheses

another_tuple = 1, 2, 3

# single-element tuples

single_element_tuple = (1,)

# complicated tuples

book = (

{"title": "1984", "author": "George Orwell", "publication_year": 1949},

["Dystopian", "Political Fiction", "Social Science"],

(True, "John Doe")

)

# Accessing book information

print(f"Title: {book[0]['title']}")

print(f"Author: {book[0]['author']}")

print(f"Genres: {', '.join(book[1])}")

print(f"Is Borrowed: {'Yes' if book[2][0] else 'No'}")

Data Structures - Arrays (Built-in)

int_array = array('i', [1, 2, 3]) # Array of integers (less used)

Created using the array class from the array module

0-Based Indexing

Homogeneous, the type of elements is determined by a type code

Mutable, can modify, append, or remove elements

Contiguous Memory

Store the data values themselves in the contiguous block (memory efficient)

Use case

large numeric datasets

binary I/O operation

from array import array

int_array = array('i', [1, 2, 3])

print(int_array)

# Output is array('i', [1, 2, 3])

# access elements with index

print(int_array[0])

# Output is 1

# add new element

int_array.append(4)

print(int_array)

# Output is array('i', [1, 2, 3, 4])

Data Structures - 2D Arrays (Matrix)

my_numpy_array = np.array([[1, 2, 3], [4, 5, 6]])

Support Multidimensional Indexing, access elements using a comma-separated tuple of indices

Homogeneous, the same data type (improve efficiency)

Mutable, modifying elements or reshaping it

Contiguous Memory, with a fixed size determined at the time of creation

Use case

Matrix Computation

Scientific Computing

Linear Algebra

Large datasets

import numpy as np

# create a 2D array (matrix)

matrix = np.array([[1, 2, 3], [4, 5, 6], [7, 8, 9]])

# access elements with index

row2_column3 = matrix[1, 2]

# change the elements

matrix[1, 2] = 20

# matrix computation

matrix_a = np.array([[1, 2], [3, 4]])

matrix_b = np.array([[5, 6], [7, 8]])

matrix_sum = np.add(matrix_a, matrix_b)

# Matrix multiplication

matrix_product = np.dot(matrix_a, matrix_b)

Data Structures - Lists (Built-in)

my_list = [1, "Hello", 3.14, [2, 4, 6]]

- 0-based Indexing and slicing

- Multidimensional List

- Heterogeneous Data Types

- Mutable

- Dynamic resizing, with some memory overhead (e.g., append, remove, insert, pop)

- Concatenation (+) and Repetition (*)

- Sorting and Reversing

#Creating a list and adding elements

my_list = [1, 2, 3]

my_list.append(4) # Add at the end

my_list.insert(1, 'a') # Insert at index 1

#Accessing elements

print("First element:", my_list[0]) # Access first element

#Modifying elements

my_list[2] = 'b' # Change the element at index 2

#Removing elements by value and by index

my_list.remove('a') # Remove the first occurrence of 'a'

popped_element = my_list.pop(2) # Remove and return the element at index 2

#Slicing

sublist = my_list[1:2] # Elements from index 1 up to, but not including, index 2

#Concatenation and repetition

another_list = ['a', 'b']

con_list = my_list + another_list

rep_list= my_list * 3

#Sorting and reversing

my_list.reverse()

con_list.sort(key=str) # Sort by each element's string form; int and str cannot be compared directly

# Multidimensional list

matrix = [[1, 2, 3], [4, 5, 6], [7, 8]]

Positional and Named Parameters

Positional parameters are based on the order in which I provide them.

def create_pizza(cheese, tomatoes, olives):

    return f"Created a pizza with {cheese} cheese, {tomatoes} tomatoes, and {olives} olives."

# The sequence of parameters matters

my_pizza = create_pizza("mozzarella", "cherry", "green")

print(my_pizza)

To compare, Named (Keyword) Parameters are matched by name, so their order does not matter.

def create_pizza(cheese, tomatoes, olives):

    return f"Created a pizza with {cheese} cheese, {tomatoes} tomatoes, and {olives} olives."

my_pizza = create_pizza(tomatoes="cherry", cheese="mozzarella", olives="green")

print(my_pizza)

Class

class Solution:

    def My_Method(self, word1: str, word2: str) -> str:

        ...  # method body not shown here

A blueprint for creating objects

Group data (Class attributes and Instance attributes) and functions (methods)

Each instance method in a class takes self as its first parameter, which refers to the instance of the class

Create an instance from the defined class, my_solution = Solution( )

#how to use class and methods included

#Create an instance of Solution, which is my_solution

my_solution = Solution()

result = my_solution.My_Method("abc", "pqr")

print(result)

Debug Methods

VS Code Debugger (what is going on inside the code?)

The most common tool is print(); inside a loop, it makes it easy to check what changes on each iteration

VSCode Debugger is a powerful tool to check the program flow:

Step 1: Install the Python extension to enable the Run and Debug feature for Python

Step 2: Open the folder and create a 'launch.json' file for future customisation

breakpoint: where to pause the execution

Debug Cell (in the menu next to the Execute Cell icon)

The debug toolbar buttons, in order, are Continue, Step Over, Step Into, Step Out, Restart, Disconnect

- Continue: resume execution until the next breakpoint or the end of the code cell

- Step Over: execute this line of code then pause execution at the next line of code

- Step Into: if this line calls a function, go inside that function and pause at its first line

- Step Out: run the rest of the current function and pause back at the line that called it

- Restart: restart the debugging

- Disconnect: end the debugging

Key Points in Computer Science

A short summary for key concepts in CS50

BITS, BYTE

Pre-study

Data is stored as 1s and 0s (i.e., the binary system) in a computer

BIT (BINARY DIGIT): the smallest unit, e.g., 1 or 0

BYTE: = 8 BITS, e.g., 01011011

KB (KILOBYTE): = 1,024 BYTES, e.g., space for around 1/3 page of text

MB (MEGABYTE): = 1,024 KB, e.g., space for 1 book, 1 photo, or 1 minute of music

GB (GIGABYTE): = 1,024 MB

TB (TERABYTE): = 1,024 GB

...

n BITS can represent 2^n different values, so the largest unsigned value they can hold is 2^n - 1
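The arithmetic above can be checked in Python. A small sketch; the helper names are my own.

```python
def distinct_values(n_bits: int) -> int:
    """Number of different patterns that n bits can hold."""
    return 2 ** n_bits


def max_unsigned(n_bits: int) -> int:
    """Largest value when every pattern is used for 0 and up."""
    return 2 ** n_bits - 1


print(distinct_values(8), max_unsigned(8))  # 256 255
print(int("01011011", 2))                   # 91: the example byte read as a number

# Each unit is 1,024 times the previous one
for power, unit in enumerate(["KB", "MB", "GB", "TB"], start=1):
    print(f"1 {unit} = {1024 ** power:,} bytes")
```

One of the 2^n patterns is spent on 0, which is why the largest value is 2^n - 1 rather than 2^n.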

Data Types (C Programming Language)

CS50 Week1 C

int: integer, 4 bytes of memory (32 bits). One bit holds the sign, so the biggest value is 01111111 11111111 11111111 11111111, which is 2,147,483,647. The range is -2^31 to 2^31-1

unsigned int: the same 32 bits but no negative values, which roughly doubles the positive range. The range is 0 to 2^32-1

long: 8 bytes of memory (64 bits) on typical 64-bit Linux and macOS systems, giving a larger range than int (on 64-bit Windows, long is still 4 bytes)

char: single character, 1 byte of memory (8 bits). ASCII maps numbers to characters, e.g., 'A' is 65, i.e., 0100 0001. As a signed char, the range is -2^7 to 2^7-1

float: floating point, 4 bytes of memory (32 bits), limited precision

double: floating point, 8 bytes of memory (64 bits), more precise

bool: true or false (in C before C23, include <stdbool.h>)

string: an array of characters ending with '\0'. C has no built-in string type; CS50's cs50.h defines string as char *
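The ranges above follow from the bit widths, which a short Python sketch can confirm. It assumes the common sizes listed above (8-bit char, 32-bit int, 64-bit long) and two's complement for signed numbers; the helper names are my own.

```python
import struct


def signed_range(n_bits: int) -> tuple:
    """Range of a two's complement signed integer with n bits."""
    return -(2 ** (n_bits - 1)), 2 ** (n_bits - 1) - 1


def unsigned_range(n_bits: int) -> tuple:
    """Range of an unsigned integer with n bits."""
    return 0, 2 ** n_bits - 1


for name, bits in [("char", 8), ("int", 32), ("long", 64)]:
    low, high = signed_range(bits)
    print(f"{name}: {low:,} to {high:,}")
print(unsigned_range(32))  # (0, 4294967295)

# 32 ones: huge as unsigned, but -1 when read as a two's complement signed int
all_ones = int("1" * 32, 2)
print(all_ones, all_ones - 2 ** 32)  # 4294967295 -1

# 'A' is stored as the number 65
print(ord("A"), format(ord("A"), "08b"))  # 65 01000001

# Round-tripping 0.1 through a 4-byte float shows its limited precision
print(struct.unpack("f", struct.pack("f", 0.1))[0])
```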

Command Line

CS50 Week 1 C

clear (Ctrl + l): clear the screen

ls: list the files (executable files, text files, folders and so on) in the current directory

pwd: print working directory

cd <directory>: change directory

cd: with no argument, go to the home directory

cd .: stay in the current directory

cd ..: move up to the parent directory

mkdir <directory>: make directory

cp <source> <destination>: duplicate source file and place it in destination (can create a new destination at the same time)

- cp -r <source directory> <destination directory> duplicate the entire directory and put it in the destination directory

rm <file>: remove files

rm -f <file>: force removal without asking for confirmation

rm -r <directory>: remove the entire directory

rm -rf <directory>: directly delete the entire directory

mv <source> <destination>: move or rename files
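For comparison, the same file operations can be scripted in Python with the os and shutil modules. A minimal sketch that works inside a temporary folder so nothing real is touched; the folder and file names are made up.

```python
import os
import shutil
import tempfile

original_dir = os.getcwd()
with tempfile.TemporaryDirectory() as workspace:
    os.chdir(workspace)                                     # cd <directory>
    try:
        print(os.getcwd())                                  # pwd
        os.mkdir("project")                                 # mkdir project
        with open("project/notes.txt", "w") as f:
            f.write("hello")
        shutil.copy("project/notes.txt", "project/copy.txt")  # cp <source> <destination>
        shutil.copytree("project", "backup")                # cp -r <source dir> <destination dir>
        os.rename("project/copy.txt", "project/renamed.txt")  # mv (here: rename)
        os.remove("project/renamed.txt")                    # rm <file>
        shutil.rmtree("backup")                             # rm -r (shutil never prompts)
        listing = sorted(os.listdir("."))                   # ls
        project_listing = sorted(os.listdir("project"))
        print(listing, project_listing)                     # ['project'] ['notes.txt']
    finally:
        os.chdir(original_dir)                              # cd back before the folder is deleted
```

Unlike `rm`, these Python calls never ask for confirmation, so they behave more like the `-f` variants.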

Sort Algorithms

CS50 Week 3 Algorithms

O( ): upper bound on the running time (worst case)

Ω( ): lower bound on the running time (best case)

Selection Sort: repeatedly scan the unsorted part to find the smallest number, then swap it with the first unsorted position O(n^2) Ω(n^2)

Bubble Sort: repeatedly compare neighbouring pairs and swap them so the smaller one is on the left and the larger one is on the right; stop early when a full pass makes no swaps O(n^2) Ω(n)

Merge Sort: recursively divide the list into two halves until each half has only one number, then merge the sorted halves by repeatedly taking the smaller of the two front numbers O(n log n) Ω(n log n)
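Here is a sketch of the three algorithms in Python (CS50 teaches them in C; these translations and function names are my own). Each returns a new sorted list and leaves the input unchanged. The early exit in `bubble_sort` is what gives the Ω(n) best case: on already-sorted input it stops after one pass.

```python
def selection_sort(numbers):
    nums = list(numbers)  # work on a copy
    for i in range(len(nums)):
        smallest = i
        for j in range(i + 1, len(nums)):  # scan the unsorted part
            if nums[j] < nums[smallest]:
                smallest = j
        nums[i], nums[smallest] = nums[smallest], nums[i]  # swap into place
    return nums


def bubble_sort(numbers):
    nums = list(numbers)
    for end in range(len(nums) - 1, 0, -1):
        swapped = False
        for j in range(end):  # compare neighbouring pairs
            if nums[j] > nums[j + 1]:
                nums[j], nums[j + 1] = nums[j + 1], nums[j]
                swapped = True
        if not swapped:  # no swaps means already sorted: stop early
            break
    return nums


def merge_sort(numbers):
    if len(numbers) <= 1:  # a single number is already sorted
        return list(numbers)
    middle = len(numbers) // 2
    left = merge_sort(numbers[:middle])
    right = merge_sort(numbers[middle:])
    merged, i, j = [], 0, 0
    while i < len(left) and j < len(right):  # take the smaller front number
        if left[i] <= right[j]:
            merged.append(left[i])
            i += 1
        else:
            merged.append(right[j])
            j += 1
    return merged + left[i:] + right[j:]  # one side may have numbers left over


print(merge_sort([5, 2, 9, 1, 5, 6]))  # [1, 2, 5, 5, 6, 9]
```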

Memory Address - Hexadecimal

CS50 Week 4 Memory

Hexadecimal is a base-16 number system

0 1 2 3 4 5 6 7 8 9 A B C D E F

- Hexadecimal is a shorthand for binary: each hex digit stands for 4 bits, e.g., 1111 is F

- The prefix 0x marks a hexadecimal number, as in a memory address like 0x7ffc3a7cffbc

- Color code #FFFFFF means Red 255, Green 255, Blue 255 (white)
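Python's built-ins make these conversions easy to try. A small sketch; `hex_color_to_rgb` is my own helper, and the `id()` line relies on a CPython implementation detail (the id is the object's memory address).

```python
def hex_color_to_rgb(code: str) -> tuple:
    """Turn a '#RRGGBB' colour code into (red, green, blue), each 0-255."""
    digits = code.lstrip("#")
    return tuple(int(digits[i:i + 2], 16) for i in (0, 2, 4))


print(hex(255), int("FF", 16), int("1111", 2) == 0xF)  # 0xff 255 True
print(hex_color_to_rgb("#FFFFFF"))  # (255, 255, 255)
print(hex_color_to_rgb("#FF8000"))  # (255, 128, 0)

# In CPython, id() is the object's memory address; hex() shows it like C's %p
print(hex(id(object())))
```

Each pair of hex digits covers 8 bits, which is exactly one colour channel from 0 to 255.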

Memory Address - Pointer C

CS50 Week 4 Memory

The pointer is the address of data in memory

#include <stdio.h>

int main() {

// A regular integer variable

int var = 10;

// A pointer variable that can hold the address of an int

int *ptr;

// Store the address of var in ptr

ptr = &var;

// Prints the value of var

printf("Value of var: %d\n", var);

// Prints the memory address of var

printf("Address of var: %p\n", &var);

// Prints the memory address stored in ptr

printf("Value of ptr: %p\n", ptr);

// Dereferences ptr and prints the value of var

printf("Value pointed to by ptr: %d\n", *ptr);

return 0;

}

Data Structure - Arrays C

int arr[3] = {10, 20, 30}; // example

- Linear Data Structure

- Same Data Type

- Value means actual data, Index means relative location, starts from 0

Fixed Size - occupies consecutive memory

Specify the number of elements the array will have

int arr[3]

Single Pointer

//C code

#include <stdio.h>

int main() {

int arr[3] = {10, 20, 30};

// Pointer to the first element of arr

int *ptr = arr;

for(int i = 0; i < 3; i++) {

// Accessing array elements using pointer arithmetic

printf("%d ", *(ptr + i));

}

return 0;

}

Acronyms for Web Development

DHCP (Dynamic Host Configuration Protocol): Assign an IP address to the host automatically

IP (Internet Protocol): Address of each device to communicate on the Internet

IPv4 (Internet Protocol Version 4): 32-bit number written as four numbers, each in the range [0, 255], e.g., 192.168.0.1

IPv6 (Internet Protocol Version 6): 128-bit number written as eight groups of hexadecimal digits, allowing about 3.4 × 10^38 (340 undecillion) addresses, e.g., 2001:0db8:85a3:0000:0000:8a2e:0370:7334

DNS (Domain Name System): Translate human-memorable domain names into IP addresses

TCP (Transmission Control Protocol): Deliver packets reliably and, using port numbers, direct them to the correct program on the receiving machine

HTTP (Hypertext Transfer Protocol): Dictate the format of requests (e.g., GET, from client to server) and responses (from server to client)

HTML (Hypertext Markup Language): Use angle-bracket enclosed tags to define the structure of a web page semantically

CSS (Cascading Style Sheets): Customise a website's look and feel; a styling language that can be written in a <style> tag or linked as a .css file

JavaScript: Make the web page interactive, <script src="script.js">

DOM (Document Object Model): Represent the web page as a tree of objects (with properties and methods)

API (Application Programming Interface): Exchange data and perform actions across different platforms using requests and responses

JSON (JavaScript Object Notation): A text format built from objects (key/value pairs, like dictionaries) and arrays (like lists), which can be nested in any combination

jQuery: a JavaScript library that simplifies syntax; it is usually loaded with a <script> tag from a CDN, and $ is the entry point to all its features.

CDN (Content Delivery Network): <script src="https://code.jquery.com/jquery-3.6.0.min.js"> for jQuery

AJAX (Asynchronous JavaScript and XML): Allow web pages to update dynamically (part of the web page without full-load) by asynchronously exchanging data with a server (in the background)
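A few of these ideas can be explored with Python's standard library. A minimal sketch: the ipaddress module parses the IPv4 and IPv6 examples above, and the json module shows how JSON objects and arrays map to dictionaries and lists. The JSON payload is made up.

```python
import ipaddress
import json

v4 = ipaddress.ip_address("192.168.0.1")
v6 = ipaddress.ip_address("2001:0db8:85a3:0000:0000:8a2e:0370:7334")

print(v4.version, list(v4.packed))  # 4 [192, 168, 0, 1]  (four 8-bit numbers)
print(v6.version, v6.compressed)    # 6 2001:db8:85a3::8a2e:370:7334
print(v4.is_private)                # True: 192.168.x.x is a private range
print(f"{2 ** 32:,} IPv4 vs {2 ** 128:.1e} IPv6 addresses")

# JSON text <-> Python: objects become dicts, arrays become lists
payload = '{"city": "London", "temps": [12, 15, 9], "stations": [{"id": 1}, {"id": 2}]}'
data = json.loads(payload)
print(data["temps"][1], data["stations"][0]["id"])  # 15 1
print(json.dumps({"ok": True, "items": [1, 2]}))    # {"ok": true, "items": [1, 2]}
```

The compressed IPv6 form drops leading zeros in each group and replaces one run of all-zero groups with `::`.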

Certification

Building Expertise through Accumulated Certifications

20/06/2024

Google Cloud Platform Professional Data Engineer

BigQuery

Cloud Storage

Dataflow

CloudSQL

Cloud Composer

11/07/2023

Azure Data Engineer Associate

Data Pipeline

Streaming Analysis

Data Store, Movement and Transformation

Data Encryption

Databricks, Data Factory, Gen2

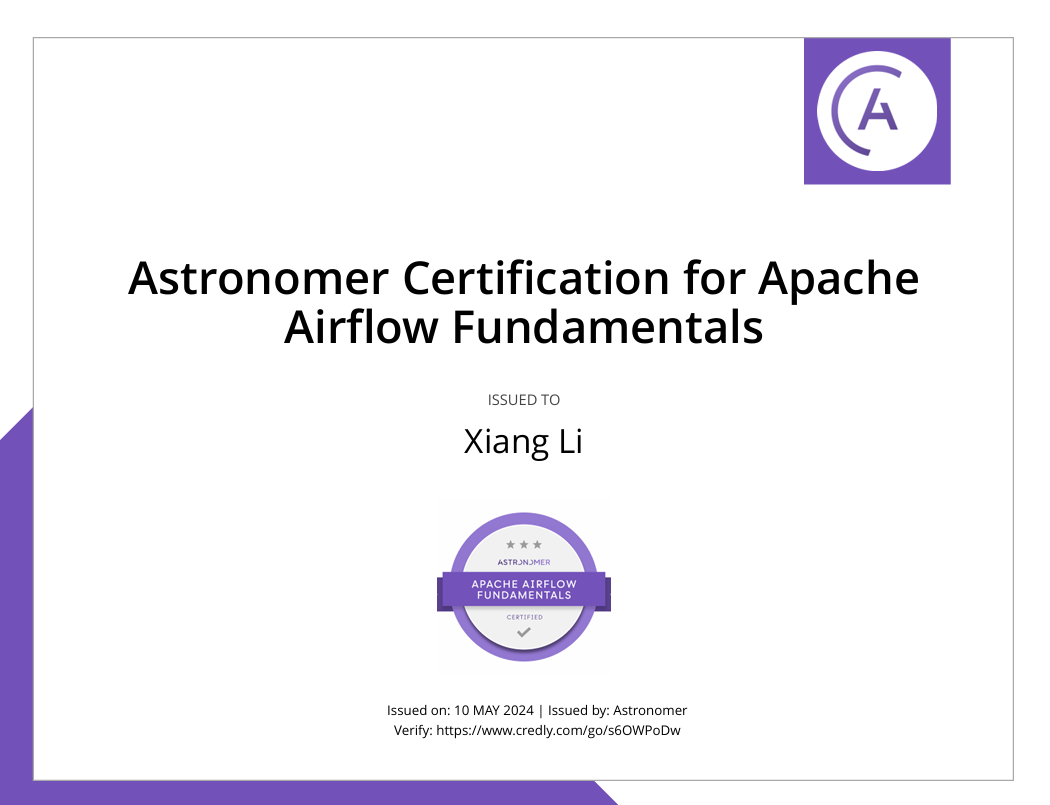

10/05/2024

Apache Airflow Fundamentals

Orchestration

Scheduling

Dataset

DAGs

Docker

Web Server

19/04/2024

Data Engineering Bootcamp

Data Pipeline

Data Warehousing

Analytics Engineer

Terraform

Bash

Docker

Python

08/08/2024

dbt (Data Build Tool) Bootcamp

Modelling

Materialisation

Documentation

Macro

Test

Snowflake

09/04/2023

Power BI Data Analyst Associate

Power BI Desktop

Power BI Service

Prepare Data

Model Data

Visualise and Analyse

Deploy and Maintain

11/06/2023

Azure Enterprise Data Analyst Associate

Azure Synapse Analytics

Power BI

Microsoft Purview

Performance Optimisation

26/03/2023

Azure Fundamentals

Azure

Cloud Data

Cloud Networking

Cloud Security

Cloud Services

Cloud Storage

13/04/2022

Google Data Analytics

Spreadsheets

Tableau

R

SQL

13/01/2023

Data Analyst Professional

SQL

R

Case Study

Presentation

17/03/2023

Data Science Bootcamp

Query databases

Design databases

Data Views

Transactions

Analytical Functions

Big Data

21/01/2023

Lakehouse Fundamentals

Databricks